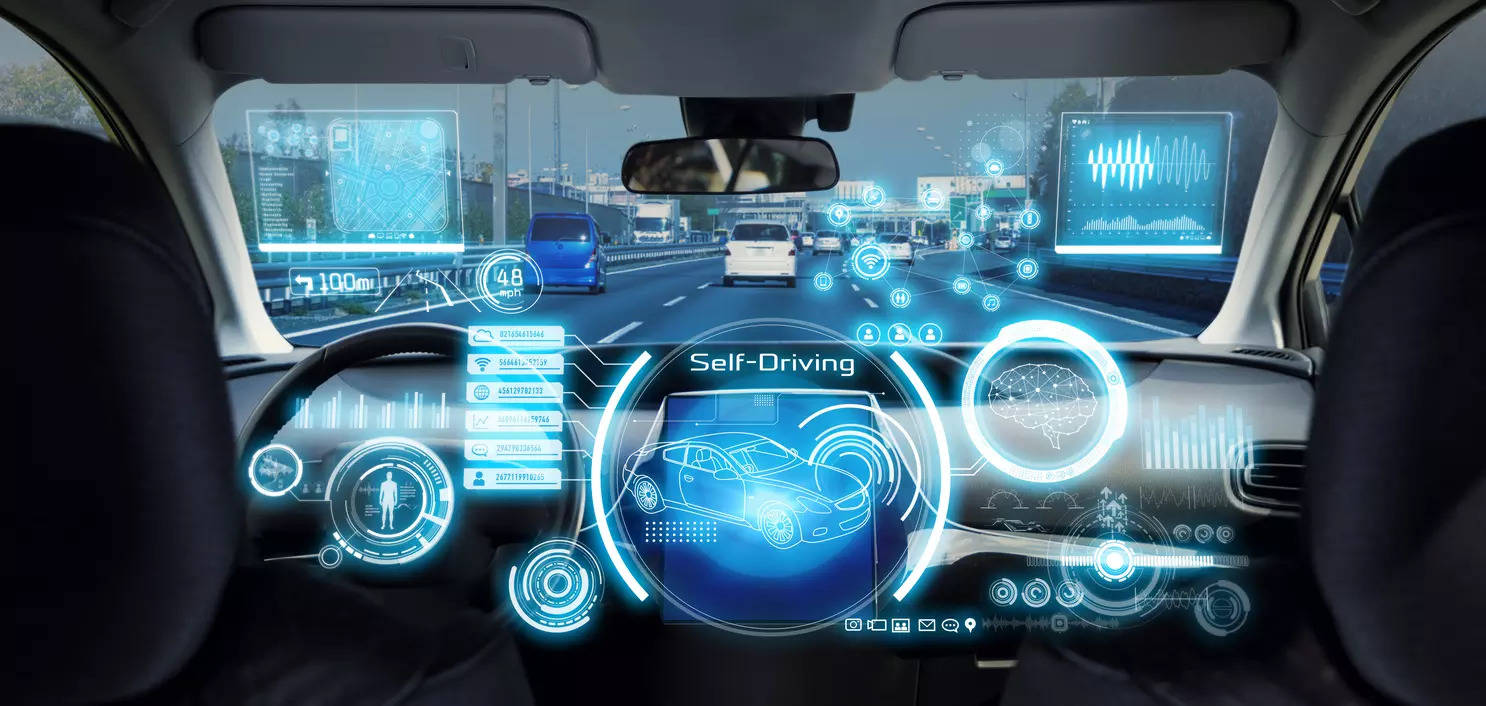

Although the dream of fully self-driving cars still belongs to the future, autonomous vehicles (AVs) are already part of our world. As with other forms of AI, integrating this technology into daily life requires weighing its pros and cons.

One of the main benefits of AVs is their potential to foster sustainable transport. They could reduce traffic congestion and lessen reliance on fossil fuels. AVs could also improve road safety and offer accessible transport to communities that lack it, including people without a driver’s license.

However, despite these advantages, many people remain wary of fully automated AVs.

An Australian study conducted by Sjaan Koppel from Monash University found that 42% of people would “never” use an automated vehicle to transport their unaccompanied children. By contrast, only 7% said they would “definitely” use one.

This distrust of AI seems to stem from a fear that machines might make mistakes or decisions that do not align with human values. The concern recalls the 1983 film adaptation of Stephen King’s horror novel “Christine,” in which a car becomes murderous. People worry about being increasingly excluded from machines’ decision-making loop.

Automation in cars is categorized into levels (as defined by SAE International), with level 0 representing ‘no automation’ and level 5 indicating ‘full driving automation’, where humans are mere passengers.

Currently, consumers have access to levels 0 to 2, while level 3, which provides ‘conditional automation’, is available in a limited capacity. The second-highest level, level 4 or ‘high automation’, is being tested. Today’s AVs require drivers to supervise and intervene when the automation falls short.

To prevent AVs from becoming uncontrollable, AI programmers use a method called value alignment. This approach becomes especially important as vehicles with higher levels of autonomy are developed and tested.

Value alignment involves programming AI to behave in ways that align with human goals. This can be done explicitly, in knowledge-based systems, or implicitly, through learning in neural networks.

For AVs, value alignment would vary depending on the vehicle’s purpose and location. It would likely take cultural values into account and comply with local laws and regulations, such as stopping for an ambulance.
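To make the explicit, knowledge-based route more concrete, here is a deliberately toy sketch in Python. It assumes a hypothetical, highly simplified `Observation` from the car’s sensors and a hand-written, ordered list of rules (the rule names, priorities and the `decide` function are all illustrative inventions, not how any real AV stack works). The first rule whose condition holds determines the action, and the rule’s name is returned alongside it so the decision can be inspected afterwards. The implicit, neural-network route cannot be captured this simply.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    PULL_OVER_AND_STOP = "pull over and stop"
    STOP = "stop"


@dataclass
class Observation:
    # Hypothetical, highly simplified perception output (an assumption for illustration).
    emergency_vehicle_with_siren: bool = False
    pedestrian_in_path: bool = False
    red_light: bool = False


# Hypothetical hand-written rules as (name, condition, action); earlier rules take priority.
RULES = [
    ("avoid pedestrian", lambda o: o.pedestrian_in_path, Action.STOP),
    ("yield to emergency vehicle", lambda o: o.emergency_vehicle_with_siren, Action.PULL_OVER_AND_STOP),
    ("obey traffic signal", lambda o: o.red_light, Action.STOP),
]


def decide(obs: Observation) -> tuple[Action, str]:
    """Return the action of the first matching rule, plus that rule's name."""
    for name, condition, action in RULES:
        if condition(obs):
            return action, name
    # No rule fired, so keep driving normally.
    return Action.CONTINUE, "no rule triggered"


if __name__ == "__main__":
    action, reason = decide(Observation(emergency_vehicle_with_siren=True))
    print(action.value, "because:", reason)
```

Even this tiny rule set has to encode a value judgment in its ordering (here, a pedestrian outranks an ambulance), which is exactly where questions of whose values to reflect begin.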

The ‘trolley problem’ poses a significant challenge for AV alignment.

First introduced by philosopher Philippa Foot in 1967, the trolley problem explores human morals and ethics. Applied to AVs, it can help us understand the complexities of aligning AI with human values.

Imagine an automated vehicle heading towards a crash. It could swerve right to avoid hitting five people but endanger one person instead, or swerve left to avoid the one person but put the five at risk.

What should the AV do? Which choice best reflects human values?

Now consider a scenario in which the AV is a level 1 or 2 vehicle, allowing the driver to take control. When the AV issues a warning, which way would you steer?

Would your decision change if the choice were between five adults and one child?

What if the one person were a close family member, such as your mother or father?

These questions highlight that the trolley problem was never meant to have a definitive answer.

What this dilemma shows is that aligning AVs with human values is complex.

Consider Google’s mishap with its AI model, Gemini. An attempt to reduce racism and gender stereotypes resulted in misinformation and absurd outputs, such as Nazi-era soldiers depicted as people of color. Achieving alignment is difficult, and deciding whose values to reflect is equally challenging.

Despite these complications, the effort to ensure AVs align with human values holds promise.

Aligned AVs could make driving safer. Human drivers often overestimate their driving skills, and most car crashes result from human errors such as speeding, distraction, or fatigue.

Can AVs help us drive more safely and reliably? Technologies such as lane-keeping assist and adaptive cruise control in level 1 AVs already support safer driving.

As AVs increasingly populate our roads, it becomes crucial to reinforce responsible driving alongside this technology.

Our ability to make effective decisions and drive safely, even with AV assistance, is essential. Research shows that people often over-rely on automated systems, a phenomenon known as automation bias. We tend to view technology as infallible.

The term ‘death by GPS’ has gained currency because of cases in which people blindly follow navigation systems even in the face of clear evidence that the technology is wrong.

A notable example is when tourists in Queensland drove into a bay while following their GPS in an attempt to reach North Stradbroke Island.

The trolley problem illustrates that technology can be as fallible as humans, possibly more so because it lacks embodied awareness.

The dystopian fear of AI taking over might not be as dramatic as imagined. A more immediate threat to AV safety could be people’s readiness to hand over control to AI.

Our uncritical use of AI affects our cognitive functions, including our sense of direction. This means our driving skills may degrade as we become more reliant on technology.

While we might see level 5 AVs in the future, the present depends on human decision-making and our innate skepticism.

Exposure to AV failures can counteract automation bias. Demanding greater transparency in AI decision-making can help AVs support, and even improve, human-led road safety.

(Source: PTI)